Google offers non-Pixel owners a way to avoid waiting on hold with latest test

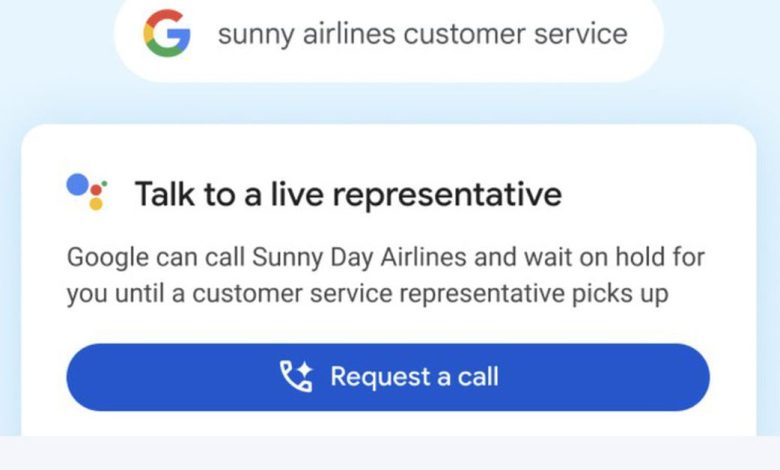

Google is testing a new “Talk to a Live Representative” feature that allows the company’s software to call a business on your behalf, navigate through its phone tree, wait on hold, and then notify you when there’s a real human being available for you to speak to. The test, which is available now as a Search Labs experimental feature, was spotted by X user Sterling.

As 9to5Google notes, the feature sounds a lot like the Pixel's "Hold for Me," but with a couple of key advantages. First, it isn't limited to the Pixel 3 and newer; it works on any phone. Second, "Talk to a Live Representative" proactively places the call to the business for you, whereas "Hold for Me" is something you turn on only after you've already dialed and been put on hold.

You access the feature by searching for a company's customer support number. If the company is supported, Google will display a "Request a call" button, 9to5Google reports. Supported businesses include a selection of US airlines, telcos, retailers, insurers, and other service companies. You select the reason for your call, and Google sends SMS updates on its progress before calling you back once a rep is available to speak with you.

The experimental feature is available in the US through the Google app on iOS and Android, as well as Chrome on desktop, 9to5Google notes.
