Up 75% in a Year, This Artificial Intelligence (AI) Chip Stock Could Skyrocket Another 50%


Investors on the hunt for stocks capable of taking advantage of the artificial intelligence (AI) chip boom probably don’t have Lam Research (NASDAQ: LRCX) on their radar. However, a closer look at the company’s prospects and the potential acceleration in its growth suggests that they should. This chip stock is on its way to capitalizing on a hot technology trend.

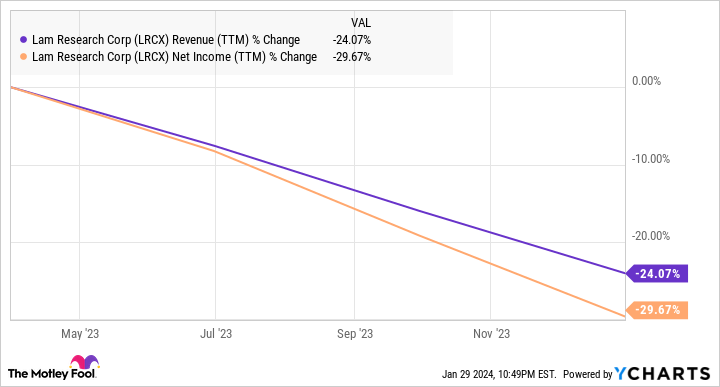

Still, the stock’s recent performance shows that some investors are clued into its potential. Shares of Lam Research headed higher after the company released fiscal 2024 second-quarter results (for the three months ended Dec. 24, 2023) on Jan. 24. The stock is now up an impressive 75% over the past year. That may seem a tad surprising, considering how sharply its revenue and earnings declined over the same period.

LRCX Revenue (TTM) data by YCharts

What those investors are likely discovering is that Lam Research’s latest results indicate it is on the cusp of a turnaround. Let’s check out the reasons why this semiconductor equipment company could sustain its impressive stock market rally.

A turnaround in semiconductor equipment spending will power Lam Research’s growth

Lam Research reported fiscal Q2 revenue of $3.76 billion, down 29% from the year-ago period. The company’s non-GAAP earnings shrank to $7.52 per share from $10.71 per share in the same quarter last year. This sharp year-over-year decline can be attributed to weak spending on semiconductor equipment in 2023, especially for memory chips.

Semiconductor equipment spending was forecast to drop 15% in 2023 to $84 billion. The memory equipment market took a bigger hit, with spending down 46% last year. Lam Research gets 48% of its revenue from selling memory equipment, so the steep decline in this market, caused by an oversupply of memory chips, weighed heavily on the company’s financial performance.

The good news is that Lam Research’s guidance indicates that its financial performance is set to improve. The company guided for $3.7 billion in revenue and $7.25 per share in adjusted earnings for the current quarter, which will end on March 31. That points toward a smaller year-over-year revenue decline of 4%, while the bottom line is set to improve from the year-ago period’s figure of $6.99 per share.
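The year-over-year comparisons above can be checked with a few lines of arithmetic. The following is a minimal sketch that uses only the figures quoted in the article; the helper name `pct_change` and the variable names are illustrative, and the year-ago revenue is an implied value derived from the stated 29% decline rather than a reported number.

```python
def pct_change(current: float, prior: float) -> float:
    """Percentage change from a prior value to a current value."""
    return (current - prior) / prior * 100.0


# Fiscal Q2 (reported): non-GAAP EPS of $7.52 versus $10.71 a year earlier.
q2_eps_change = pct_change(7.52, 10.71)

# Fiscal Q2 revenue of $3.76B was down 29%, which implies the year-ago base.
q2_prior_revenue_bn = 3.76 / (1 - 0.29)

# Fiscal Q3 (guidance): EPS of $7.25 versus $6.99 a year earlier.
q3_eps_change = pct_change(7.25, 6.99)

print(f"Q2 EPS change: {q2_eps_change:.1f}%")
print(f"Implied year-ago Q2 revenue: ${q2_prior_revenue_bn:.2f}B")
print(f"Q3 guided EPS change: {q3_eps_change:.1f}%")
```

On these inputs, the earnings decline in fiscal Q2 was roughly as steep as the revenue decline, while the guided fiscal Q3 earnings figure implies a low-single-digit percentage gain.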

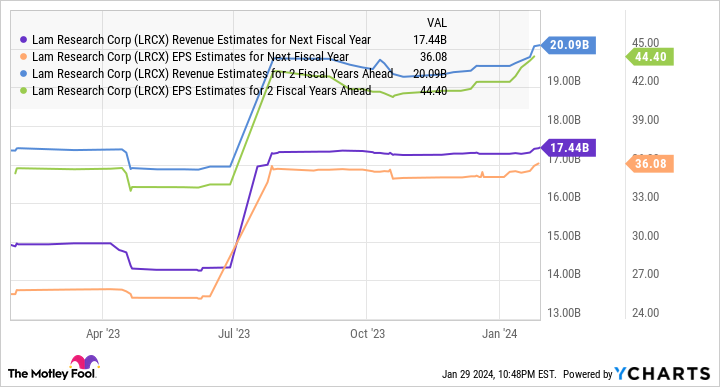

Analysts expect Lam Research’s fiscal 2024 revenue to drop almost 16% to $14.7 billion. Its earnings are expected to shrink to $27.93 per share from $34.16 per share in the prior fiscal year. However, Lam’s top and bottom lines are expected to return to solid growth in the new fiscal year, which will begin in July 2024.

LRCX Revenue Estimates for Next Fiscal Year data by YCharts

It is easy to see why Lam’s guidance and analysts’ expectations point to sunny days ahead. Overall spending on semiconductor equipment is anticipated to jump 15% in 2024 to $97 billion. The memory equipment market is expected to grow even faster, rising 65% in 2024 to $27 billion in revenue. The proliferation of AI in multiple markets is going to be a key factor driving the turnaround in spending on memory equipment in particular and semiconductor equipment in general.
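As a rough consistency check on these forecasts, the sketch below applies the quoted growth rates to the quoted dollar figures. It assumes the overall and memory forecasts are comparable, which the article does not confirm; the 2023 memory base and the memory share are implied values, not numbers from the source.

```python
# Figures quoted in the article (billions of dollars, growth as fractions).
total_2023_bn = 84.0
total_growth_2024 = 0.15
memory_2024_bn = 27.0
memory_growth_2024 = 0.65

# Overall equipment spending: apply 15% growth to the 2023 forecast.
total_2024_bn = total_2023_bn * (1 + total_growth_2024)

# Memory equipment: back out the implied 2023 base from the 2024 figure.
memory_2023_bn = memory_2024_bn / (1 + memory_growth_2024)

# Implied memory share of overall 2024 spending (assumes comparable forecasts).
memory_share_2024 = memory_2024_bn / total_2024_bn

print(f"Implied 2024 total: ${total_2024_bn:.1f}B")
print(f"Implied 2023 memory base: ${memory_2023_bn:.1f}B")
print(f"Implied 2024 memory share: {memory_share_2024:.0%}")
```

The implied 2024 total comes out just under the $97 billion quoted, which is consistent with rounding in the forecast.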

AI adoption is going to be a key catalyst for the company

Lam Research management pointed out on the latest earnings conference call that they have “secured additional advanced packaging wins for high-bandwidth memory, which is critical for enabling advanced AI servers.” It is worth noting that demand for high-bandwidth memory (HBM) has been increasing rapidly because it speeds up the transfer of data to AI processors.

Gartner estimates that HBM bit demand could grow eightfold between 2022 and 2027. That’s not surprising as major AI-focused chipmakers such as Nvidia (NASDAQ: NVDA) integrate more HBM into their AI chips. Nvidia’s flagship H100 AI processor, for example, carries 80 gigabytes (GB) of HBM, and the company has been focused on adding more HBM to its chips to maintain its competitive advantage.

For instance, Nvidia’s recently announced GH200 Grace Hopper Superchip comes with a massive 282GB of HBM. Meanwhile, Nvidia’s upcoming H200 AI processor, which is the successor to the H100, is expected to pack 141GB of HBM. Not surprisingly, Nvidia has reportedly made huge payments of $1.3 billion to memory makers such as SK Hynix and Micron Technology (NASDAQ: MU) to secure more HBM supply.

This explains why Micron pointed out on its December earnings call that it will be supplying HBM for Nvidia’s Grace Hopper GH200 and H200 platforms starting this year. The memory specialist has already started the production ramp of its latest generation HBM chips and expects to “generate several hundred millions of [sic] dollars of HBM revenue in fiscal 2024.” Even better, Micron says that HBM demand should continue to grow in 2025 as well.

In addition, Micron says that the adoption of AI in smartphones and personal computers (PCs) will create the need for more memory chips. That’s because each AI-enabled smartphone or PC may need an additional 4GB to 8GB of DRAM per unit. As such, the major factors that were weighing down Lam Research, weak memory demand and oversupply, should not be a problem for the company going forward.

We have already seen that analysts anticipate a nice jump in Lam’s revenue and earnings over the next couple of fiscal years. Assuming Lam Research does hit $44 per share in earnings in fiscal 2026 and maintains its forward earnings multiple of 29 at that time (which is in line with the Nasdaq-100’s forward earnings multiple), its stock price could increase to $1,276 in just over two years.

That represents a 50% jump from current levels. So, investors can still consider buying Lam Research as this AI stock’s rally seems set to continue thanks to improving conditions in the memory market, which will eventually lead to robust growth in the company’s revenue and earnings.
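The price target above is a simple earnings-times-multiple calculation. The sketch below reproduces it and backs out the share price that a 50% upside implies; the variable names are illustrative, and the implied current price is derived from the article’s own figures rather than from a quoted market price.

```python
# Inputs taken from the article.
projected_eps_fy2026 = 44.0   # dollars per share, analyst estimate
forward_pe_multiple = 29.0    # assumed to hold through fiscal 2026
stated_upside = 0.50          # the "50% jump" cited in the article

# Price target = projected earnings per share x earnings multiple.
target_price = projected_eps_fy2026 * forward_pe_multiple

# Share price implied by the stated upside (an inference, not a market quote).
implied_current_price = target_price / (1 + stated_upside)

print(f"Target price: ${target_price:,.0f}")
print(f"Implied current price: ${implied_current_price:,.2f}")
```

The result is sensitive to both assumptions: a lower multiple or an earnings miss reduces the target proportionally.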

Harsh Chauhan has no position in any of the stocks mentioned. The Motley Fool has positions in and recommends Lam Research and Nvidia. The Motley Fool recommends Gartner. The Motley Fool has a disclosure policy.
